Power Analysis by Data Simulation in R - Part IV

Part IV - Mixed Effects Models

Power Analysis by simulation in R for really any design - Part IV

This is the final part of my Power Analysis in R series, focusing on (Generalized) Linear Mixed Effects Models. I assume that, at this point, you already read Parts I to III of this tutorial, if you haven’t I suggest doing so before working through this. As I already mentioned in previous parts, there are good available options for Mixed Model power analysis already, an overview of which can be found in the SOP document of the D2P2 lab. This tutorial however, is meant to give you a deeper understanding of what you are doing when you simulate data and do power analysis by simulation. I hope that the last 3 parts already helped you in achieving this and that this final part will help to generalize this knowledge to mixed-effects models. The good news is that we will actually not be doing a lot of new stuff, the novel part about mixed-effects models compared to the models that we simulated in part III is mostly that we need to use the same methods and add some new things to them (after all this tutorial is about teaching a general skill so it’s good that we can build up on what we learned in the first three parts). Still, there is a lot of ground to cover so we better get started right away.

Mixed-effects models are extremely powerful in that they are incredibly flexible to the data-structure that they can work with, most notably that they:

- They are able to handle between-subject and within-subject effects in the same model along with numeric predictors (covariates).

- They allow for non-independent observations, i.e. observations from the same unit of measurement (e.g. a participant, a school, a country etc.) and even from multiple nested or crossed (see infobox below) units of measurements simultaneously.

A few words on nested vs. crossed random effects

As mixed-effects model literature has unfortunately developed to use different terms to mean different things, I just want to spend a few words on what I mean by nested as opposed to crossed random effects.

A data structure is nested when observations are organized in one or more clusters. The classic example of nested data is that we observe the performance of school-children over time. Because we collect data throughout multiple years, we have multiple observations per child, so we can say that observations are nested within children. We also observe multiple children per class, so children are nested within classes. If we involve not one but multiple schools in our study, then we have children nested in classes nested in schools. If we have schools from multiple countries we have …. well, you get the idea. It is important to realize that nested data is defined by “belong” and “contain” relationships. The data is fully nested if (per reasonable assumption in this case) each child only belongs to one specific class, each class only belongs to one school etc. This point is sometimes confusing as we might, in our data-frame, have multiple classes from different schools that have the same ID in the data-set, for instance there might be a class called 9A in schools 1 and 2. However, it is important to realize that these classes are not the same but different classes in reality. They contain different students and belong to a different school. Therefore the data is nested.

In crossed, or cross-classified data, each observation does also belong to not only 1, but multiple nesting levels. However, the as opposed to fully nested data, the nesting levels are not bounded to each other by a “contain” or “belong” relationship as was the case in the example above where classes contained students and belonged to schools. A classic example of cross-classified data is experimental data in which, for instance, people had to press a button quickly whenever pictures appear on a computer screen. Imagine that we recruit 100 participants and each sees the same 20 pictures 5 times. In this case we have 100x20x5 = 10,000 observations. Imagine we would identify these observations by their row-number (i.e. 1 … 10,000). Now each of these observations “belongs” to a participant, and “belongs” to a specific stimulus. For instance, assuming that the data is ordered ascending by participant and stimulus, the first observation (observation 1) belongs to participant 1 and stimulus 1, the last observation (observation 10,000) belongs to participant 100 and stimulus 20. As you might already realize, in this case it is not the case that a participant either contains or belongs to a specific stimulus and vice versa: Each participant sees each stimulus, or framed differently, each stimulus presents itself to each participant - the two nesting levels are independent from each other, they are cross-classified.

Building up mixed-effects models

We will approach the simulation of mixed-effects models in a bottom-up approach. We will first start simple with a model that has only a single effect and nesting level and then explore different situations in which there are more fixed and random effects and both. Throughout this tutorial, we will use a new hypothetical research question focused on music preference. The overarching research goal will be to - once and for all - find out whether Rock or Pop music is better. Of course, we could just ask people what they prefer, but we do not want to do that because we want a more objective measure of what is Rock and Pop (people might have different ideas about the genres). Therefore, we will have participants listen to a bunch of different songs that are either from a Spotify “best-of-pop” or “best-of-rock” playlist and have them rate the song on a evaluation scale from 0-100 points. We will start this of really simple and make this design more complex along the way to demonstrate the different aspects that we need to consider when simulating mixed-model data.

Simulating a Within-Subject Effect

As a first demonstration, we will simulate a MEM with only the within-subject effect.

We also will now write a function that allows us to, quite flexibly, simulate various data structures.

No worries, it will make use of things that we already introduced in earlier parts of this tutorial (e.g. rnorm and mvrnorm) so you will hopefully be able to understand what is going on just fine.

To investigate the question stated above, we can use the following design:

- DV : liking of Song (0-100)

- IV (within-subject) : Genre of Song (Rock/Pop)

Note that we made the design choice that genre will be a within-subject effect. In theory, it could also be a between-subject design where a person listens to only 1 genre, pop or rock, but a within-subject design has the advantage that general liking of music across conditions (e.g. person 1 might like both genres more than person 2) does not affect the results (as much) as it would in a between-subject design.

I say “as much” because it could still affect the results even in a within subject design if, for instance, a person that likes music generally more will also show fewer difference between conditions compared to a person that dislikes music more generally. In that case, the overall score is related to the difference score which can impact our inference.

The first thing that we need to do is not entirely new for us at this point.

We will use the rnorm function to generate the scores for the 2 conditions.

However, we will now follow a slightly different approach where we will not create the 2 groups’ scores directly (i.e. use rnorm() for each group separately), but will instead only generate the design matrix of the data, i.e. the structure of the data without having any actual scores for the dependent variable in there.

Eventually we will then add the DV in a second step by calculating it’s value for each row in the data-set based on the values for the other variables in that row.

However, before we will use the “old” approach one last time because it allows us to explore some important insights.

Generating a design-matrix with 1 within-subject factor

As this is the first time we actually write a custom function in this tutorial, I will spend some time to explain.

The following function generate_design will allow us to pass any number of subjects that we would like it to generate.

In the function header, we pass the number of participants n_participants and the number of genres n_genres that our design should have.

Moreover it allows us to specify the number of songs per genre n_songs which at this part does not do anything in the function (we will add this functionality in a minute).

The function then calls expand.grid, a built-in R-function, that will do nothing but create one row in our data-frame for each possible combination of participant-number and genre.

The final line of the function returns this created design matrix whenever we call on the function.

generate_design <- function(n_participants, n_genres, n_songs){

design_matrix <- expand.grid(participant = 1:n_participants, genre = 1:n_genres) # here we create the data-frame

design_matrix$genre <- ifelse(design_matrix$genre ==1, "rock", "pop") # we rename the genres so its easier to follow (this is not really necessary)

return(design_matrix) # return the data-frame

}Lets start slow and first create a data-frame that represents 10 people listening to one song from each genre. Thus, when we have 10 people and 2 genres with 1 song each, there should be 20 rows in our data-frame. Let’s see

data1 <- generate_design(n_participants = 10, n_genres = 2, n_songs = 1)

paged_table(data1)Nice! Indeed our data has 20 rows, and each participant listens to rock and pop once - exactly what we wanted.

Now, as said, we would like to have each person listen to more songs - lets say 20 songs per genre.

The first instinct here could be to add n_songs to the function just as we did add n_genre.

However, this does not work as songs are unique per genre.

That is, while each participant listens to both genres, each song only belongs to one genre.

For instance the specific song “Blank Space by Taylor Swift” only belongs to the “pop” but not the “rock” condition.

Thus, we need to make sure that the songs will have unique identifiers, rather than numbers that will appear in both conditions.

Luckily we can easily fix this:

generate_design <- function(n_participants, n_genres, n_songs){

design_matrix <- expand.grid(participant = 1:n_participants, genre = 1:n_genres, song = 1:n_songs) # adding song to expand.grid

design_matrix$genre <- ifelse(design_matrix$genre ==1, "rock", "pop")

design_matrix$song <- paste0(design_matrix$genre, "_", design_matrix$song)

return(design_matrix)

}As you can see, we just added a slight modification to the function.

We added the song variable to expand.grid and in line 3 of the function we rename the song variable using paste0, a function that will just concatenate strings together.

We use this to create song-names that now include the genre name.

This way, song 1 in the rock-genre will have a different name from song 1 in the pop-genre, telling our model that these are in fact unique songs that cannot be interchanged.

Note that this also means that we will pass the number of songs per genre instead of the total number of songs.

Lets try this out.

If our code functions correctly, we should have 10 participants x2 genres x20 songs per genre = 400 rows in our data frame.

data2 <- generate_design(n_participants = 10, n_genres = 2, n_songs = 20) # note that we pass the number of songs per GENRE

data2 <- data2[order(data2$participant),] # order the data by participant number so its easier to see how its structured

paged_table(data2)Indeed, we get 400 rows and each participant listens to 20 rock songs and 20 pop songs, that all have unique names - neat! Now that we have the design-simulation out of the way, let us give scores to the songs. We first will do this with only 1 song per person per genre (that is the same across participants) to keep the model simple first.

Revisiting the old simulation approach once more

As mentioned our DV is the liking of songs on a 100-point scale.

Let us assume that, in the population, both genres are liked equally and that people also in general rather like music. We are pretty sure that people like music compared to disliking it, so we are pretty sure the population mean will not be smaller than 50 and we can be 100% sure it will not be larger than 100. Using the 2SD rule from earlier parts of the tutorial as a standard to put our assumed means into numbers we would therefore use a normal distribution with a mean 0f 75 and an SD of 7.5 for both groups. As we are working with a within-subject design, we would also assume the two distributions to be correlated in some way. For example, we could assume that the scores are positively correlated, meaning that a person with a relatively higher liking for rock, will also, in general, like pop music more and vice versa.

As a side note: We are working with a bounded scale here, where scores cannot be smaller than 0 or larger than 100. As normal distributions are actually unbounded, they do not perfectly represent this situation, and we might get scores larger than the boundaries. There are different ways we could deal with this.

First, we could specify the boundaries by censoring the normal distribution. This means we would set each score falls outside the scores to either the minimum or maximum of the scale. This will result in scores that only fall inside of the specified range, but will result in peaks at both ends of the scale, as we are keeping the scores.

We could also truncate the distribution, i.e. throw away all scores that do not fall inside our specified range. This will not result in peaks at the scale boundaries, but will however, result in data loss, that we would either have to accept or compensate for by resampling until we get scores inside the range. Both approaches have there advantages and disadvantages, and the question which one we should use boils down to what we believe will happen in our study: Will people overproportionally give very low or high ratings (i.e. people really HATING or LOVING songs)?

In that case we would expect peaks at the boundaries and might prefer censoring the normal distribution as it might be closer to what we will observe in our study. If we do not expect that and mainly care about keeping the scores inside the range, we could instead use truncation. Here, we will just ignore this problem for now (which is which is not that problematic after all as the underlying process that generates the data is the same normal distribution in all cases).Before, we simulated a situation like this by specifying the group means that we would expect and the correlation between the group means that we expect using the mvrnorm function (if you do not know exactly know what this code means consider revisiting part II of this tutorial):

require(MASS) # load MASS package## Loading required package: MASSgroup_means <- c(rock = 75,pop = 75) # define means of both genres in a vector

rock_sd <- 7.5 # define sd of rock music

pop_sd <- 7.5 # define sd of pop music

correlation <- 0.2 # define their correlation

sigma <- matrix(c(rock_sd^2, rock_sd*pop_sd*correlation, rock_sd*pop_sd*correlation, pop_sd^2), ncol = 2) # define variance-covariance matrix

set.seed(1)

bivnorm <- data.frame(mvrnorm(nrow(data1)/2, group_means, sigma)) # simulate bivariate normal (we use nrow(data1)/2, the number of rows from the data-set above to simulate 10 observations per group)

par(mfrow=c(1,2))

hist(bivnorm$rock, main = "liking of rock music", xlab = "")

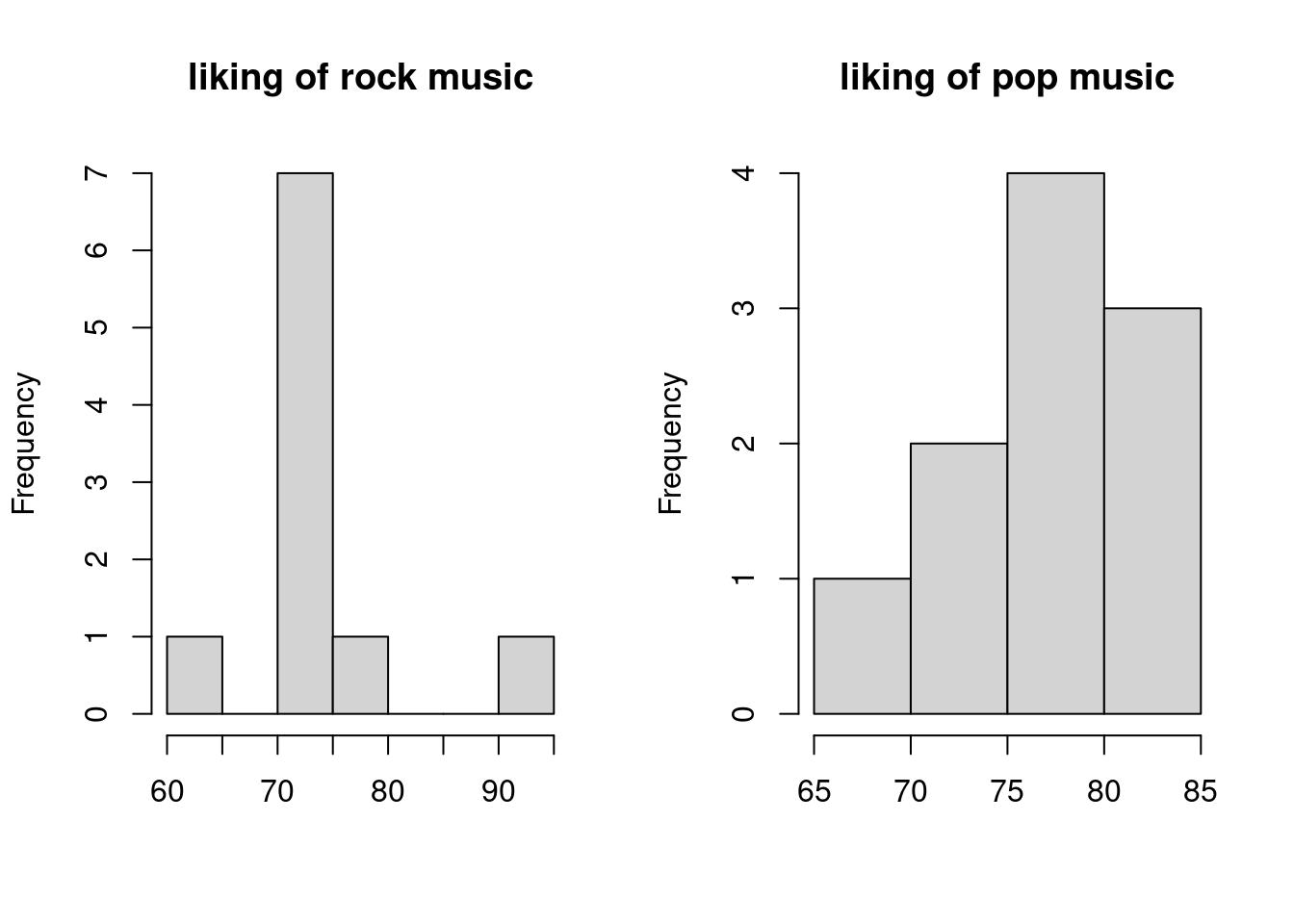

hist(bivnorm$pop, main = "liking of pop music", xlab = "") The above histograms show how our simulation with 10 participants would turn out.

As we would expect, with 10 participants the histograms for each group look quite wacky.

The above histograms show how our simulation with 10 participants would turn out.

As we would expect, with 10 participants the histograms for each group look quite wacky.

Previously, we analyzed these data with a paired t-test, for example:

t.test(bivnorm$rock, bivnorm$pop, paired = T)##

## Paired t-test

##

## data: bivnorm$rock and bivnorm$pop

## t = -0.73577, df = 9, p-value = 0.4806

## alternative hypothesis: true difference in means is not equal to 0

## 95 percent confidence interval:

## -9.618983 4.897481

## sample estimates:

## mean of the differences

## -2.360751showing us that there is no difference between the groups, just as we would expect. We could of course also model the same data in a mixed-effects model (we have seen in part II and III of this tutorial how t-tests can be rewritten as linear models).

So lets try this, here comes our first mixed model simulation! However, let us first increase the sample-size to very high so I can demonstrate some important things that we do not want to get distorted by sampling variation in a small sample-size by now. So instead of 10 people let us simulate the same data but with 10,000 people.

data1 <- generate_design(10000, 2, 1)

group_means <- c(rock = 75,pop = 75) # define means of both genres in a vector

rock_sd <- 7.5 # define sd of rock music

pop_sd <- 7.5 # define sd of pop music

correlation <- 0.2 # define their correlation

sigma <- matrix(c(rock_sd^2, rock_sd*pop_sd*correlation, rock_sd*pop_sd*correlation, pop_sd^2), ncol = 2) # define variance-covariance matrix

set.seed(123)

bivnorm <- data.frame(mvrnorm(nrow(data1)/2, group_means, sigma)) # simulate bivariate normal (we use nrow(data1)/2, the number of rows from the data-set above to simulate 10 observations per group)

par(mfrow=c(1,2))

hist(bivnorm$rock, main = "liking of rock music", xlab = "")

hist(bivnorm$pop, main = "liking of pop music", xlab = "")

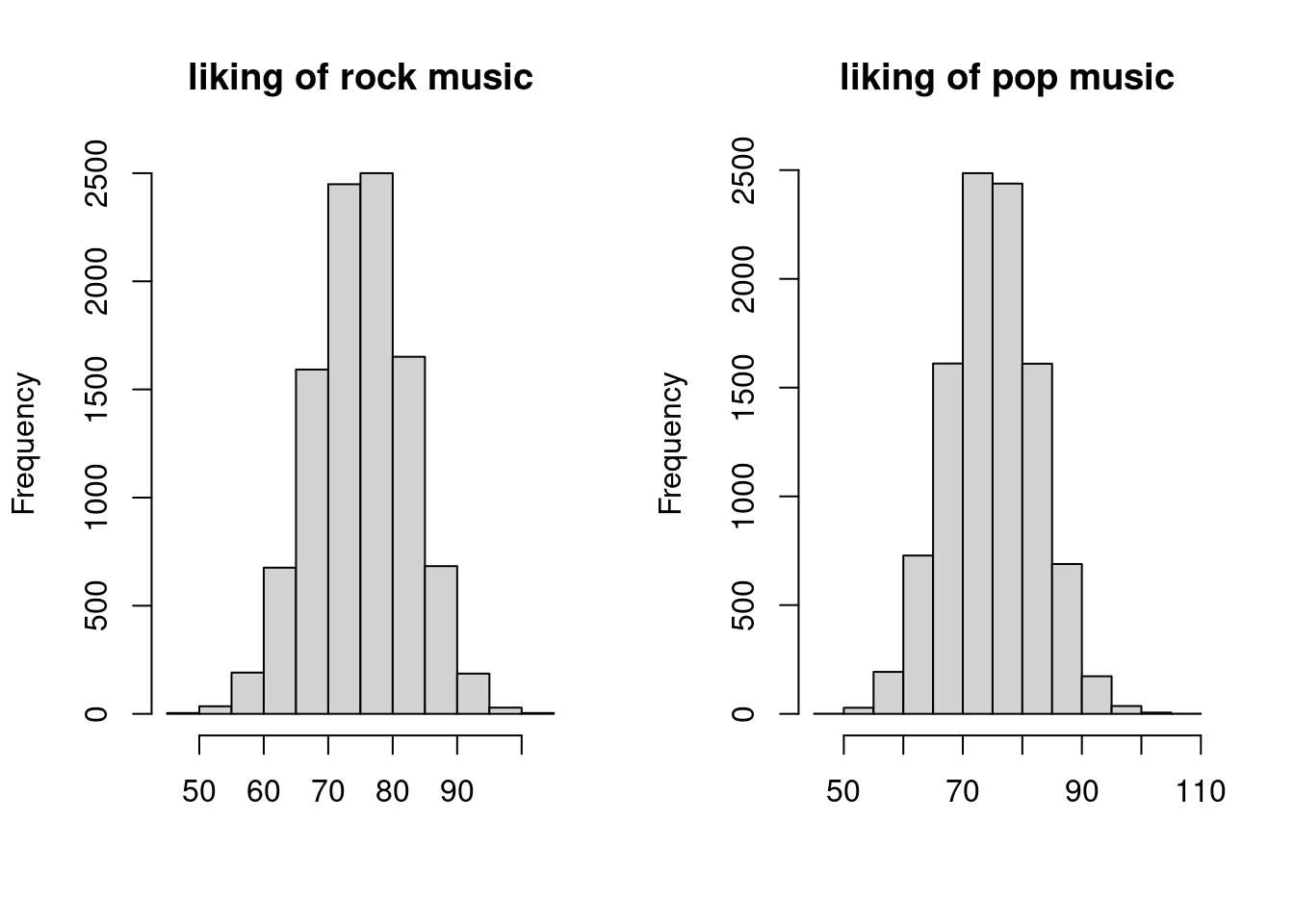

We can already see that the histograms look much more normal now. So let us redo the t-test.

t.test(bivnorm$rock, bivnorm$pop, paired = T) ##

## Paired t-test

##

## data: bivnorm$rock and bivnorm$pop

## t = 0.90922, df = 9999, p-value = 0.3633

## alternative hypothesis: true difference in means is not equal to 0

## 95 percent confidence interval:

## -0.09986109 0.27264389

## sample estimates:

## mean of the differences

## 0.0863914We can immediately see that the difference between groups got smaller, and the confidence interval is more narrow, showing us that we have a much more accurate simulation now.

So now to the mixed model.

First we have to restructure the above data as lme4::lmer uses long-format data, while the bivnorm data that we have has columns for the observations.

bivnorm_dat <- data.frame(cbind(liking = c(bivnorm$rock, bivnorm$pop), genre = c(rep("rock", (nrow(data1)/2)), rep("pop", (nrow(data1)/2))), participant = rep(1:(nrow(data1)/2), 2))) # this just converts bivnorm to long format and adds the genre variable

bivnorm_dat$liking <- as.numeric(bivnorm_dat$liking) # variable was converted to character for some reason in cbind so lets make it numerical again

bivnorm_dat$genre_f <- factor(bivnorm_dat$genre) # make genre a factor

lmer_bivnorm <- lmer(liking ~ genre_f + (1 | participant), bivnorm_dat)

summary(lmer_bivnorm)## Linear mixed model fit by REML. t-tests use Satterthwaite's method [

## lmerModLmerTest]

## Formula: liking ~ genre_f + (1 | participant)

## Data: bivnorm_dat

##

## REML criterion at convergence: 136955.6

##

## Scaled residuals:

## Min 1Q Median 3Q Max

## -3.3987 -0.6185 0.0035 0.6294 3.8687

##

## Random effects:

## Groups Name Variance Std.Dev.

## participant (Intercept) 11.09 3.330

## Residual 45.14 6.719

## Number of obs: 20000, groups: participant, 10000

##

## Fixed effects:

## Estimate Std. Error df t value Pr(>|t|)

## (Intercept) 74.98622 0.05802 9999.00005 1292.519 <2e-16 ***

## genre_f1 -0.04320 0.04751 9999.00002 -0.909 0.363

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Correlation of Fixed Effects:

## (Intr)

## genre_f1 0.000I hope that, in general, you understand the above output, as I assume you are familiar with lme4.

However, let us inspect a few things here:

- The first thing that we might notice is that the Estimate for the effect does actually look smaller than the one from the t-test. This is due to something that we discussed in part III: In the mixed model we use sum-to-zero coding, which shows each group’s deviation from the grand mean (i.e. the mean of pop and rock), which is with two groups, by definition half the total difference between the groups, which is what the t-test shows. We can verify that indeed double the estimate of the mixed model,

-0.04320*2 =-0.0864, which is very close to the0.0863914of the t-test estimate of the group difference. The fact that in one case the estimate is positive, and in the other it is negative, is just because a different reference level is used. If you switch the order of groups in the t-test above, the estimate will get negative as well. - Compared to the t-test, we also get an estimate for the intercept, representing the grand mean, just as we do in normal linear models. This is very close to the 75 mean that we simulated.

- The degrees of freedom are close to the ones from the t-test, BUT, they are a little weird in that they are decimal numbers. Unless you have used mixed models a lot, this might seem odd. However, there are reasons for this (that I do not want to go into too much), but you can see this as an indicator that we get into a complicated domain where the mixed model is not exactly comparable to the t-test, or a repeated-measures ANOVA approach to this data. However, that should not bother us too much at the moment - it suffices to realize that the df is similar to the one from the t-test, i.e. 9999 (

participants per group - 1) in this case.

One thing that might appear odd however, is that, if you look at the output of the mixed-model, we cannot find our simulated standard deviations of 7.5.

Did something go wrong? And where should we find them anyway? Should they be found as the residual variance? Or the participant random effect variance? Or should they be in the standard error of the effect? And where is our correlation of .20?

Let us investigate…

Another correlation-based detour

We have dealt with correlations and simulations extensively in part II, and again, the correlations here play a main role in solving this case.

As we are working with mvrnorm in this case, we supplied the function with a variance-covariance matrix (see part II for more explanation) for the variance, sigma <- matrix(c(rock_sd^2, rock_sd*pop_sd*correlation, rock_sd*pop_sd*correlation, pop_sd^2), ncol = 2), a matrix containing the variances of both groups (rock and pop) and their covariance that looks like this:

As both groups have the same variance there are only 2 different numbers in the matrix, namely the variance of each group, i.e. rock_sd^2 = 56.25 and pop_sd^2 = 56.25 and the covariances between the groups rock_sd*pop_sd*correlation = 11.25 (note that the covariances would be the same even if the group variances were not the same in both groups, as by definition, 2 groups can only have one covariance, i.e. the same formula is used twice in the matrix).

Thus, these two numbers should somewhere to be found in the model output, or not?

Indeed, they are and to find these numbers in the model output, we have to understand what they mean.

Let us have a look at the random effects terms, that capture the variance in components in mixed models:

Participant random variance

The Participant variance in the random effects terms of the model is the variance that we can attribute to participants, in this case it is 11.09.

That this variance is greater than 0 tells us that some variance in liking ratings of pop and rock music can be attributed to participants.

In other words, knowing a participant’s rating of pop music, we will carry some information about a participant’s rating of rock music.

This, as you might realize, sounds suspiciously like a correlation between the liking ratings across participants, and, just as suspiciously 11.09 is very close to the simulated covariance of 11.25 above.

So, might this number represent the correlation between the ratings?

As covariances are just a unstandardized correlation, we can actually check this.

For instance, if from he covariance of 11.25 from the simulation, we want to go back to the underlying correlation, we divide it by the product of the group SDs rock_sd*pop_sd, i.e. 11.25/(7.5*7.5) = 0.2, the specified correlation from above.

In the simulated data, the correlation that we actually observed was 0.1971886 and indeed (if we use the unrounded value of the participant SD that we get with VarCorr(lmer_bivnorm)[[1]][1]) we find: 11.08745/(sd(subset(bivnorm_dat, genre == "rock")$liking)*sd(subset(bivnorm_dat, genre == "pop")$liking))) = 0.1971886.

First riddle solved, the participant random variance is indeed the specified covariance!

Residual random variance

What about the Residual variance in the model output? Why does it not correspond to the other variance component in the matrix above, namely 56.25.

The residual represents the variance that cannot be attributed to any sources that we understand, i.e. sources that we model.

So first of all let us look at how much total variation there is in the data.

The total variance is just the mean variance of all scores in the two groups var(subset(bivnorm_dat, genre == "rock")$liking)+var(subset(bivnorm_dat, genre == "pop")$liking)/2 = 56.2286228.

As mentioned, he residual variance is the part of this variance that we do not know what it can be attributed to.

However, just a minute ago, we already attributed some of the variance to the participants - so we can deduct this variance, as it is not part of the residual anymore:

(var(subset(bivnorm_dat, genre == "rock")$liking)+var(subset(bivnorm_dat, genre == "pop")$liking))/2-11.08745 = 45.14 which is again, exactly what we see in the model.

Some important Remarks on this variance decomposition

It is important to mention a few things here. As you might have realized by now, finding the simulated variances in the model output is not always trivial. When models get more complex, various variances might influence different terms, and in mixed models designs, we often might deal with unbalanced data, where the group sizes are not always equal. Thus, do not expect this method to generalize to more complex designs - this was mainly a demonstration to show that mixed models are not totally “crazy” and give us weird numbers but that the numbers do indeed bear some relation to the more “simple” statistical models we worked with before. However, this meaning might sometimes be more difficult to trace. After all, this is not even a bad thing - we use mixed models to model things that are complicated, and we want the model to figure out what to do with e.g. unbalanced designs etc. If we would just be able to do the same thing with calculating some variances by hand, we would not need a mixed model after all.

An easy way to “break” mixed models

Consider a slightly different assumption about the correlation between liking scores. It is very much possible that a participant who likes rock music will dislike pop music. Thus, knowing a rock music score for a certain participant, we could expect the next score to be somewhat lower and vice versa, i.e. there would be negative correlation between the scores. If we change the above correlation in the simulation to be negative, we will get a rather weird output for the mixed model however:

correlation_neg <- -0.2 # now correlation is negative

sigma_neg <- matrix(c(rock_sd^2, rock_sd*pop_sd*correlation_neg, rock_sd*pop_sd*correlation_neg, pop_sd^2), ncol = 2) # define variance-covariance matrix

set.seed(123)

bivnorm_neg <- data.frame(mvrnorm(nrow(data1)/2, group_means, sigma_neg))

bivnorm_dat_neg <- data.frame(cbind(liking = c(bivnorm_neg$rock, bivnorm_neg$pop), genre = c(rep("rock", (nrow(data1)/2)), rep("pop", (nrow(data1)/2))), participant = rep(1:(nrow(data1)/2), 2)))

bivnorm_dat_neg$liking <- as.numeric(bivnorm_dat_neg$liking)

bivnorm_dat_neg$genre_f <- factor(bivnorm_dat_neg$genre)

lmer_bivnorm_neg <- lmer(liking ~ genre_f + (1 | participant), bivnorm_dat_neg)## boundary (singular) fit: see ?isSingularsummary(lmer_bivnorm_neg)## Linear mixed model fit by REML. t-tests use Satterthwaite's method [

## lmerModLmerTest]

## Formula: liking ~ genre_f + (1 | participant)

## Data: bivnorm_dat_neg

##

## REML criterion at convergence: 137352.1

##

## Scaled residuals:

## Min 1Q Median 3Q Max

## -4.0155 -0.6738 0.0063 0.6787 3.5617

##

## Random effects:

## Groups Name Variance Std.Dev.

## participant (Intercept) 0.00 0.000

## Residual 56.23 7.499

## Number of obs: 20000, groups: participant, 10000

##

## Fixed effects:

## Estimate Std. Error df t value Pr(>|t|)

## (Intercept) 75.04320 0.05302 19998.00000 1415.30 <2e-16 ***

## genre_f1 -0.01378 0.05302 19998.00000 -0.26 0.795

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Correlation of Fixed Effects:

## (Intr)

## genre_f1 0.000

## optimizer (nloptwrap) convergence code: 0 (OK)

## boundary (singular) fit: see ?isSingularFirst we get a warning about the fit being “singular”. Second, we see that, even though the absolute covariance is just as large this time but negative (-11.25), the model tells us that the covariance of participants is 0!! Can that be right? The correlation is indeed -0.1971886 so using the formula to infer the covariance from the group SDs in the data we would expect

cor(subset(bivnorm_dat_neg, genre == "rock")$liking, subset(bivnorm_dat_neg, genre == "pop")$liking)

*sd(subset(bivnorm_dat_neg, genre == "rock")$liking)

*sd(subset(bivnorm_dat_neg, genre == "pop")$liking)

== 0It is, however, -11.0874483, which is certainly not 0! However, take a moment to reflect on how a variance is defined as the squared standard-deviation, i.e. \(var = sd^2\). By definition, a squared number cannot be negative, and therefore the variance can also never be negative. Mixed models have a built-in restriction that honors this notion. If a covariance is negative, the mixed model gives us the closest number that it assumes reasonable for a random effect variance, namely 0 which is just an SD of 0 squared, and will tell us that the model is “singular” i.e. the variance component cannot be estimated.

Anova procedures do not “suffer” from this problem, as they use a sum-of-squares approach, where the sum-of-squares are always positive. This means that in cases where the observed correlation between the observations is negative, Anovas and mixed models will yield different results:

ez_an <- ez::ezANOVA(bivnorm_dat_neg, dv = .(liking), wid = .(participant), within = .(genre_f), detailed = T)

print(ez_an)## $ANOVA

## Effect DFn DFd SSn SSd F p

## 1 (Intercept) 1 9999 112629624.416931 451366.6 2495053.03358603 0.0000000

## 2 genre_f 1 9999 3.796855 673093.4 0.05640339 0.8122785

## p<.05 ges

## 1 * 0.990114992309

## 2 0.000003376591Which one is the true result though? Both and neither, it is just a design choice that package developers made. As Jake Westfall put it better than I ever could for models that have these negative covariances:

“The best-fitting model is one that implies that several of the random variance components are negative. But lmer() (and most other mixed model programs) constrains the estimates of variance components to be non-negative. This is generally considered a sensible constraint, since variances can of course never truly be negative. However, a consequence of this constraint is that mixed models are unable to accurately represent datasets that feature negative intraclass correlations, that is, datasets where observations from the same cluster are less (rather than more) similar on average than observations drawn randomly from the dataset, and consequently where within-cluster variance substantially exceeds between-cluster variance. Such datasets are perfectly reasonable datasets that one will occasionally come across in the real world (or accidentally simulate!), but they cannot be sensibly described by a variance-components model, because they imply negative variance components. They can however be”non-sensibly" described by such models, if the software will allow it. aov() allows it. lmer() does not".

A second, very important point to mention is that lme4 actually scales the random effect terms by the residual variance when estimating them.

What this means is that, as far as the fitting algorithm is concerned, a model with a random intercept SD of 10 and a residual SD of 20 is exactly identical to a model where the random intercept SD would be 100 and the residual would be 200 or a model where the random intercept SD would be 1 and the residual SD would be 2, at least regarding the random effect structures.

All that the model cares about is that the random intercept is 0.5 times the residual SD.

What this means for us is that when specifying the random effect structure, we do not actually need to keep the scale of the response in mind if that is difficult to do.

In my experience, in my own research where I work a lot with rating scales and/or binary decisions, keeping estimates on the original scale often helps me figuring out what effect sizes I expect but I can imagine that there are many situations in which this is not so easy.

Thus, it is good to know that in those situations you can just think about the residual SD first and then derive how much smaller / bigger you expect the random effects to be relative to the residual.

Back on track: Simulating a DV based on the data-frame row entries

I hope you forgive this rather long detour, but I felt that it was important to clarify how simulations for mixed models relate to simulations for simpler models. In the beginning of this tutorial, I mentioned that we would now use a simulation approach in which we would calculate each value for the DV directly from the other values in the data-frame for each row (i.e. directly from the design matrix). As we want to build more complicated models now, we have to leave the direct group mean simulation approach from before behind and move on to the regression weight simulation approach that I will now describe. It involves writing up the simulation in terms of a linear model notation once more (see part II and part III of this tutorial). This is not entirely new as we used linear models to simulate ANOVA designs before in part III, but what is new is that now we are not simulating groups directly before and use dummy variables for each factor but rather use a pre-baked dataset that entails all information for us to calculate the DV for each row of the dataset.

If we write the above model down in a linear model notation we get:

\[ y_i = \beta_0 + \beta_1genre_i + \epsilon_i \]

In the regression weight simulation approach, we will still start of with thinking about the (group) means. However, we will simulate each observation of the dependent variable \(y_i\) by directly using a regression equation describing the regression model that produces our expected means. Thus, for each row, the value for \(y_i\) (i.e. the liking of the particular song for the particular genre for a particular participant) will be the sum specified above.

The problem that we need to solve is that we need to figure out what \(beta_0\) and \(beta_1\) are to be able to calculate \(y_i\).

In this model, \(\beta_0\) represents the grand mean (mean of all expected group means), which is \(\mu_{grand} = (\mu_{rock}+\mu_{pop})/2 \Rightarrow \mu_{grand} = (75+75)/2 \Rightarrow \beta_0 = 75\)

As we use sum-to-zero coding for factors again (see part III of the tutorial), \(\beta_1\) represents the deviation from \(beta_0\) depending on the respective group.

We start by making genre a factor variable.

data1$genre_f <- factor(data1$genre) # make a new variable for the factor

contrasts(data1$genre_f)## [,1]

## pop 1

## rock -1We see that when genre_f in the data is equal rock, it will assume the value -1 in our linear model, while it will assume the value 1 for pop.

If we put this information in the model we can calculate the \(beta_1\) by filling in one of the group means, for instance \(\mu_{rock}\):

For instance, we can calculate \(\mu_{rock} = \beta_0 + \beta_1*genre_i\)

We specified that \(\mu_{rock} = 75\), and we just calculated that \(\beta_0 = 75\).

Moreover, we know that \(genre_i\) in this case is rock, so in the regression equation it will become -1:

\(75 = 75 + \beta_1*-1\). Solving this equation to \(beta_1\) yields \(0 =\beta_1*-1 \Rightarrow \beta_1 = 0\). This is unsurprisingly representing our initial wish that there should be no difference between group means.

The remaining part of the equation, \(\epsilon\), is the error that we specified above as sigma in mvrnorm.

If we write this down in simulation code we get:

data1$genre_pop <- ifelse(data1$genre == "pop", 1, -1)

b0 <- 75

b1 <- 0

epsilon <- 7.5

set.seed(1)

for(i in 1:nrow(data1)){

data1$liking[i] <- rnorm(1, b0+b1*data1$genre_pop[i], epsilon)

# we simulate a normal distribution here with the mean being the regression equation (except the error term) and the SD being the error term of the regression equation

}

lmem1 <- mixed(liking ~ genre + (1 | participant), data1, method = "S")## Fitting one lmer() model. [DONE]

## Calculating p-values. [DONE]summary(lmem1)## Linear mixed model fit by REML. t-tests use Satterthwaite's method [

## lmerModLmerTest]

## Formula: liking ~ genre + (1 | participant)

## Data: data

##

## REML criterion at convergence: 137424.4

##

## Scaled residuals:

## Min 1Q Median 3Q Max

## -4.2766 -0.6696 -0.0125 0.6715 3.7953

##

## Random effects:

## Groups Name Variance Std.Dev.

## participant (Intercept) 0.2734 0.5228

## Residual 56.1596 7.4940

## Number of obs: 20000, groups: participant, 10000

##

## Fixed effects:

## Estimate Std. Error df t value Pr(>|t|)

## (Intercept) 74.959773 0.053248 9998.985098 1407.756 <2e-16 ***

## genre1 0.008801 0.052990 9998.984622 0.166 0.868

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Correlation of Fixed Effects:

## (Intr)

## genre1 0.000You can see that in the above for-loop we do nothing but simulate, from a normal distribution, one observation for each row of the data-set where the specific genre in that row is taken into account.

The mean of the normal distribution for each row is the regression equation defined above, excluding \(\epsilon\), transferred into R-code.

\(\epsilon\) enters the model as the SD of this normal distribution, thereby taking its role as the error term.

All values are just as we would expect. We did not define any random intercept variance in the simulation code above, therefore we do only get very small (simulation variance) values and get a value close to 7.5 for \(\epsilon\).

Building up the random effects structure

Adding a random intercept

However, usually, the reason why we would use mixed models is because we think that there will be covariance. So how do we put our expectation that people who like music more in general will like either genre more into the model using the regression weight simulation approach? We do this by adding another term into the regression equation, that tracks a special value for each participant representing their “general liking of music”. More specifically, it tracks the difference from a specific participant from the mean music liking - their random intercept:

\[ y_{ij} = \beta_0 + u_{0j} + \beta_1genre_{i} + \epsilon_{i} \] As you can see in the equation above, we got a new index \(_j\) for the terms that were in the model previously (by the way beta_0 has no index as it the same for each row and does not depend on other variables). This index tracks the participant number in each row. Thus if \(_j = 1\) we are looking at values of participant 1 - either their liking of rock or pop music. This index is important for the new term \(u\) in the model, that represents the random intercept of the participant, which is their average deviation from the average music liking in the sample. In other words if \(u0_1 = 10\) it means that the average liking of participant 1 for pop and rock music is 10 points higher than the average liking in the sample. As it also represents an intercept (just as \(\beta_0\)) we also add the 0 here in the notation.

If we want to put our correlation of .20 in the simulation again, we can do so via calculating the random SD values that these would represent again (i.e. \(\sqrt{11.25}\) for the random intercept and and \(\sqrt{56.25-11.25}\) for the residual). Next, we can put a new term in the simulation that generates \(J\) different values for \(u0_j\) representing one intercept value for each participant that is applied based on the participant number in each row of our data-set.

b0 <- 75

b1 <- 0

sd_u0 <- sqrt(11.25)

epsilon <- sqrt(56.25-11.25)

U0 = rnorm(length(unique(data1$participant)), 0, sd_u0)

str(U0)## num [1:10000] 0.789 0.821 -2.154 -6.49 3.484 ...This is how we would simulate the random intercept values. We specify the SD of the random intercept values \(sd_{uj}\) and use it to sample from a distribution with mean 0 (the mean of random intercepts is always 0 by definition as they represent a deviation from the mean which, logically, must have a mean of 0) and a standard-deviation of \(sd_{uj}\). We sample 10,000 values from this distribution, 1 for each participant. Next we need to put this into the mean simulation.

set.seed(1)

for(i in 1:nrow(data1)){

data1$liking[i] <- rnorm(1, b0+U0[data1$participant[i]]+b1*data1$genre_pop[i], epsilon)

}

lmem2 <- mixed(liking ~ genre + (1 | participant), data1, method = "S")## Fitting one lmer() model. [DONE]

## Calculating p-values. [DONE]summary(lmem2)## Linear mixed model fit by REML. t-tests use Satterthwaite's method [

## lmerModLmerTest]

## Formula: liking ~ genre + (1 | participant)

## Data: data

##

## REML criterion at convergence: 137041.6

##

## Scaled residuals:

## Min 1Q Median 3Q Max

## -3.5076 -0.6185 -0.0083 0.6163 3.5313

##

## Random effects:

## Groups Name Variance Std.Dev.

## participant (Intercept) 11.65 3.413

## Residual 44.93 6.703

## Number of obs: 20000, groups: participant, 10000

##

## Fixed effects:

## Estimate Std. Error df t value Pr(>|t|)

## (Intercept) 74.989359 0.058404 9998.999983 1283.978 <2e-16 ***

## genre1 0.007872 0.047396 9998.999952 0.166 0.868

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Correlation of Fixed Effects:

## (Intr)

## genre1 0.000As you can see from the output, we get exactly what we would expect.

Note that it was very easy to add the random intercept to the model, we just added the term U[data1$participant[i]] to the existing 1-liner for the simulation.

If you are unfamiliar with indexing in R, this does nothing more than say that for each row i we want the value from the random intercept list U that belongs to the participant that we see at row i.

To make the random intercept simulation more traceable we can plot it. If we use the code above, but set the error term \(\epsilon\) to a very small number, we will see what influence the random effect has on the data.

epsilon_noerror <- .001

data1_noerror <- subset(data1, participant <= 20) # take only 20 participants for plotting

set.seed(1)

for(i in 1:nrow(data1_noerror)){

data1_noerror$liking[i] <- rnorm(1, b0+U0[data1_noerror$participant[i]]+b1*data1_noerror$genre_pop[i], epsilon_noerror)

}

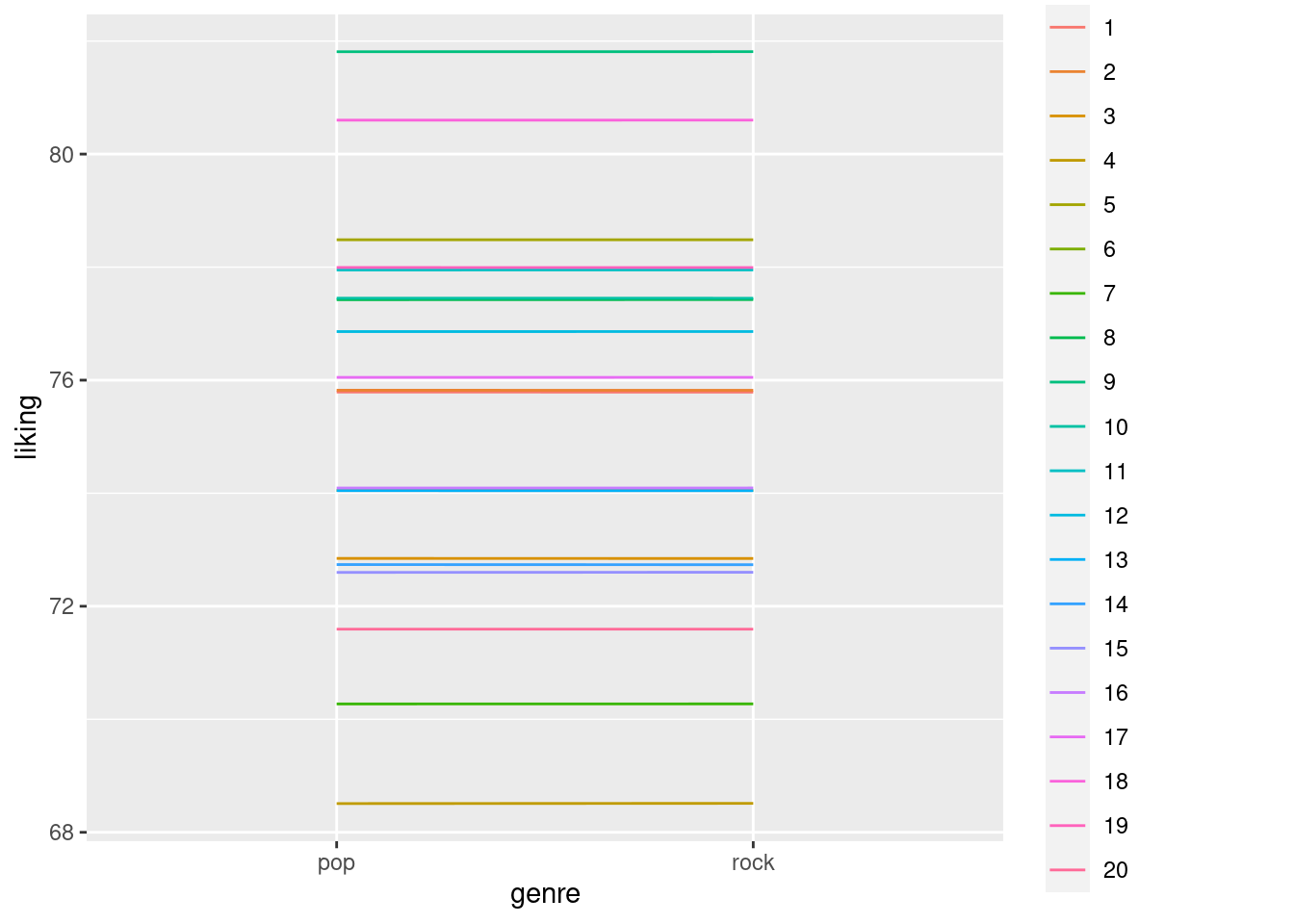

ggplot(data1_noerror, aes(x=genre, y=liking, colour=factor(participant), group = factor(participant))) + geom_line() We can see in the above plot that each participant has exactly no difference in liking for rock and pop music, as \(beta_1\) is 0 and there is no error term that causes random variations.

Still, the likings of each participant differ, as the random intercept causes overall ratings to be lower or higher for different participants.

We can see in the above plot that each participant has exactly no difference in liking for rock and pop music, as \(beta_1\) is 0 and there is no error term that causes random variations.

Still, the likings of each participant differ, as the random intercept causes overall ratings to be lower or higher for different participants.

Adding a random slope

Now, we might have the (reasonable) expectation that, while on average, the difference in liking between the two genres is 0 (represented by our fixed effect \(beta_1\) being 0), different people might still prefer one genre over the other, but that variation is just random in that we do not know where it comes from. Still, it might be there and we might want to model it using a random slope for the genre across participant, tracking, for each participant, what their personal difference from the average genre effect i.e. 0 is. In other word, we now expect that it is rarely the case that each participant likes rock and pop exactly the same. Rather, each participant has a preference but these preferences average out across the sample to be 0. We can add this to the regression equation again:

\[ y_{ij} = \beta_0 + u_{0j} + (\beta_1+u_{1j})\times genre_{i} + \epsilon_{i} \]

this equation denotes the fact the value for genre (1 or -1 representing rock and pop) does not only depend on \(beta_1\) but also the random slope term \(u_{1j}\). As you can see above the terms \(beta_1\) and \(u_{1j}\) are between brackets, so they need to be figured out before multiplying them with the value for genre. However, with the current design, we cannot attempt such a stunt, as each participant provides only 2 ratings (1 song from each genre).

Thus, only 1 possible difference score can be calculated per participant. From this single difference score, it is of course not possible to estimate two distinct parameters, namely the average effect \(beta_0\) across participants as well as the average deviation of each participant from that effect. We can only do this if each participant rates more than 2 songs per genre so that two quantities can be distinguished in the model.

Thus, we will now change our data-set a little, so that each participant provides ratings of not only 1 but 20 songs.

We already did this in data2 in the beginning of this tutorial and will now just add more participants to that data:

data2 <- generate_design(n_participants = 500, n_genres = 2, n_songs = 20) # note that we pass the number of songs per GENRE

str(data2)## 'data.frame': 20000 obs. of 3 variables:

## $ participant: int 1 2 3 4 5 6 7 8 9 10 ...

## $ genre : chr "rock" "rock" "rock" "rock" ...

## $ song : chr "rock_1" "rock_1" "rock_1" "rock_1" ...

## - attr(*, "out.attrs")=List of 2

## ..$ dim : Named int [1:3] 500 2 20

## .. ..- attr(*, "names")= chr [1:3] "participant" "genre" "song"

## ..$ dimnames:List of 3

## .. ..$ participant: chr [1:500] "participant= 1" "participant= 2" "participant= 3" "participant= 4" ...

## .. ..$ genre : chr [1:2] "genre=1" "genre=2"

## .. ..$ song : chr [1:20] "song= 1" "song= 2" "song= 3" "song= 4" ...We have 500 participants now in data2, so that the overall number of observations is the same as in data1 for now.

Now, how big do we think that the random slope should be? The number should represent our expectation about the range that any given person’s difference in liking between rock and pop music should be in. For instance, if we take the normal distribution quantities and use a 2-SD rule, we could think that 95% of people should not have a difference in the 2 likings stronger than 10 points. If we divide that by 2, we get 5 as an sd for the random slope.

data2$genre_pop <- ifelse(data2$genre == "pop", 1, -1)

b0 <- 75

b1 <- 0

sd_u0 <- sqrt(11.25)

epsilon <- sqrt(56.25-11.25)

U0 = rnorm(length(unique(data2$participant)), 0, sd_u0)

sd_u1 = 5

U1 = rnorm(length(unique(data2$participant)), 0, sd_u1)

str(U1)## num [1:500] -2.092 1.776 2.567 0.093 6.592 ...The above code shows that, again, we get 1 score per participant for the random intercept and random slope.

set.seed(1)

for(i in 1:nrow(data2)){

data2$liking[i] <- rnorm(1,

b0+U0[data2$participant[i]]+

(b1+U1[data2$participant[i]])*data2$genre_pop[i],

epsilon)

}

lmem3 <- mixed(liking ~ genre + (1 + genre | participant), data2, method = "S", control = lmerControl(optimizer = "bobyqa"))## Fitting one lmer() model. [DONE]

## Calculating p-values. [DONE]summary(lmem3)## Linear mixed model fit by REML. t-tests use Satterthwaite's method [

## lmerModLmerTest]

## Formula: liking ~ genre + (1 + genre | participant)

## Data: data

## Control: lmerControl(optimizer = "bobyqa")

##

## REML criterion at convergence: 135817.2

##

## Scaled residuals:

## Min 1Q Median 3Q Max

## -3.8360 -0.6544 -0.0134 0.6536 3.5644

##

## Random effects:

## Groups Name Variance Std.Dev. Corr

## participant (Intercept) 11.42 3.379

## genre1 28.33 5.323 -0.03

## Residual 45.21 6.724

## Number of obs: 20000, groups: participant, 500

##

## Fixed effects:

## Estimate Std. Error df t value Pr(>|t|)

## (Intercept) 74.9965 0.1584 499.0000 473.407 <2e-16 ***

## genre1 -0.2564 0.2427 498.9999 -1.056 0.291

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Correlation of Fixed Effects:

## (Intr)

## genre1 -0.025Unsurprisingly, the output above shows the amount of random slope variance that we intended to simulate.

Again we can plot this combination of random slope + intercept.

data2_noerror <- subset(data2, participant <= 20) # take only 20 participants for plotting

set.seed(1)

for(i in 1:nrow(data2_noerror)){

data2_noerror$liking[i] <- rnorm(1,

b0+U0[data2_noerror$participant[i]]+

(b1+U1[data2_noerror$participant[i]])*data2_noerror$genre_pop[i],

epsilon_noerror)

}

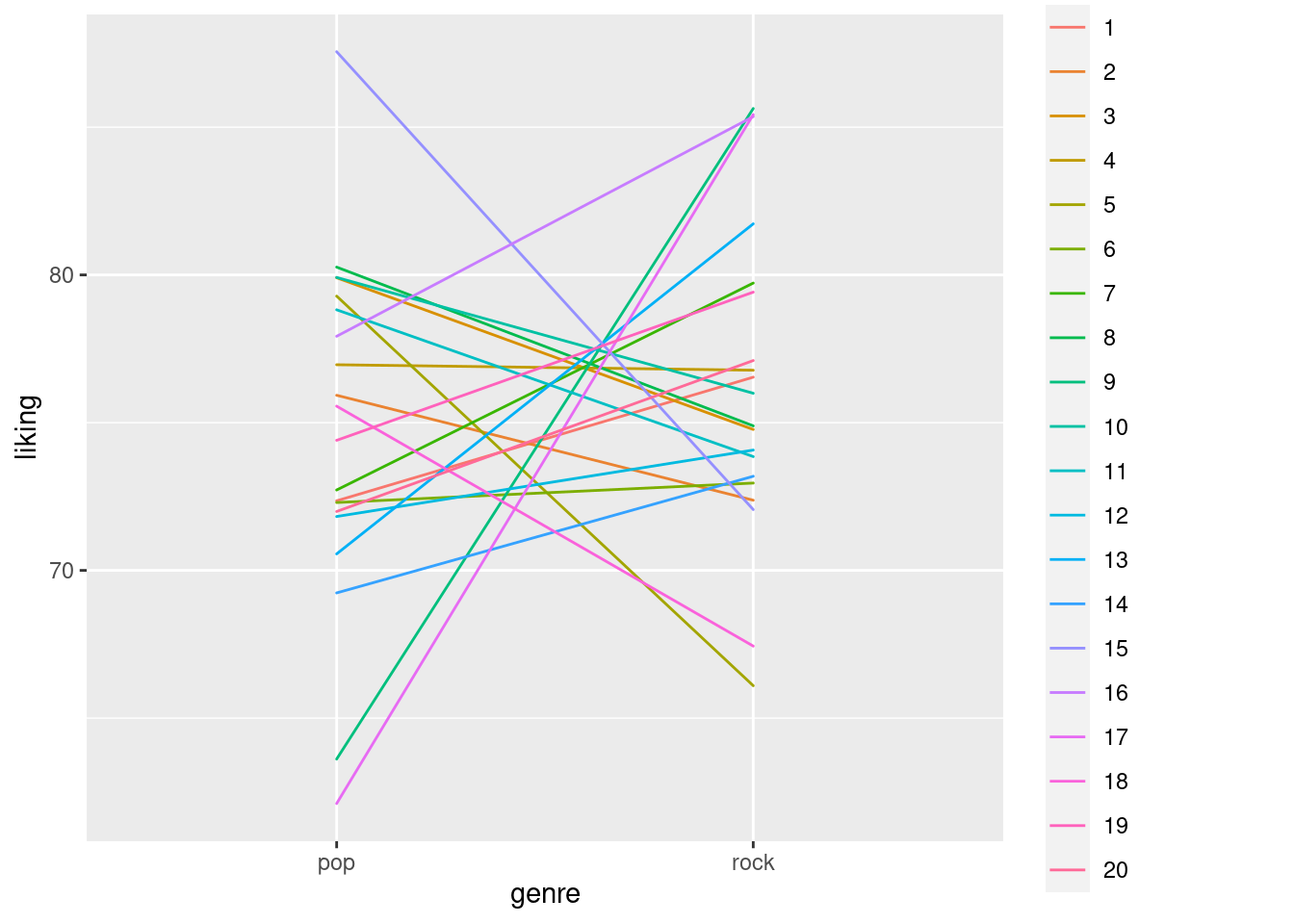

ggplot(data2_noerror, aes(x=genre, y=liking, colour=factor(participant), group = factor(participant))) + geom_line() We can now see that not only does each participant still have a different mean, they also have do not have flat slopes anymore, but can have even large differences in their liking for pop vs. rock music.

However, across all participants the difference is still zero.

We can now see that not only does each participant still have a different mean, they also have do not have flat slopes anymore, but can have even large differences in their liking for pop vs. rock music.

However, across all participants the difference is still zero.

Adding random term correlations

We can see that in the above model output, we get a small number next to the random slope. This number -0.03 is the correlation between the random terms. It means that if the random intercept for a specific participant is high, the slope would also be highly positive and vice versa. In our situation, this correlation can be helpful in addressing the problem that in order to have a really high random intercept, you should have very high likings for both genres. For instance, a person can only have an average liking of 100, if they have a liking of 100 for both, rock and pop. Thus, we can expect a negative correlation between the random intercept and slope, where high liking (high random intercepts) is related to lower differences in liking (lower random slopes).

Luckily, we do not have to use any new tricks here, we can just make use of the mvrnorm function again, but this time, instead of using it to simulate correlated groups, we will use it for the simulation of the random terms \(u_{0j}\) and \(u_{1j}\).

We could, for instance assume a negative correlation of -.20

set.seed(1)

b0 <- 75

b1 <- 0

sd_u0 <- sqrt(11.25)

epsilon <- sqrt(56.25-11.25)

sd_u1 <- 5

corr_u01 <- -.20

sigma_u01 <- matrix(c(sd_u0^2, sd_u0*sd_u1*corr_u01, sd_u0*sd_u1*corr_u01, sd_u1^2), ncol = 2)

U01 <- mvrnorm(length(unique(data2$participant)),c(0,0),sigma_u01)

str(U01)## num [1:500, 1:2] 0.472 0.726 4.686 -1.858 -3.504 ...

## - attr(*, "dimnames")=List of 2

## ..$ : NULL

## ..$ : NULLcor(U01)[2]## [1] -0.1446309We can see that we got a matrix with 2 rows of 500 observations each and that their correlation is cor(U01)[2] = -0.1446309, which is not exactly what we wanted, but relatively close.

The difference is due to the fact that for the correlation, there are only 500 pairs of scores that can be used to estimate it and it is therefore relatively unstable (try increasing the size of U01 to 10,000 observations for example and you will see that it is much closer to what we would expect).

Now, we have to put this into the model again.

for(i in 1:nrow(data2)){

data2$liking[i] <- rnorm(1,

b0+U01[data2$participant[i], 1]+

(b1+U01[data2$participant[i], 2])*data2$genre_pop[i],

epsilon)

}

lmem4 <- mixed(liking ~ genre + (1 + genre | participant), data2, method = "S", control = lmerControl(optimizer = "bobyqa"))## Fitting one lmer() model. [DONE]

## Calculating p-values. [DONE]summary(lmem4)## Linear mixed model fit by REML. t-tests use Satterthwaite's method [

## lmerModLmerTest]

## Formula: liking ~ genre + (1 + genre | participant)

## Data: data

## Control: lmerControl(optimizer = "bobyqa")

##

## REML criterion at convergence: 135757.9

##

## Scaled residuals:

## Min 1Q Median 3Q Max

## -3.9311 -0.6568 -0.0113 0.6562 3.6397

##

## Random effects:

## Groups Name Variance Std.Dev. Corr

## participant (Intercept) 12.18 3.489

## genre1 25.71 5.070 -0.16

## Residual 45.13 6.718

## Number of obs: 20000, groups: participant, 500

##

## Fixed effects:

## Estimate Std. Error df t value Pr(>|t|)

## (Intercept) 75.1087 0.1631 499.0003 460.45 <2e-16 ***

## genre1 0.1299 0.2317 498.9998 0.56 0.575

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Correlation of Fixed Effects:

## (Intr)

## genre1 -0.153As you can see, the only thing that changed here is that we are using the matrix of random intercept and slope values here for the simulation here by assessing it at column 1 (U01[data2$participant[i], 1]) for the random intercepts and column 2 (U01[data2$participant[i], 2]) for the random slopes.

Adding a crossed random effects term.

It is possible, that some songs are universally more liked across people than others.

For example if we imagine for a moment that the song pop_1 in our data-set represents, for example Blank Space by Taylor Swift - obviously an amazing song that most people will like.

Similarly, universally liked songs might be in the rock genre and also similarly, there might be songs in both genres that most people do not like so much (remember though that we are working with a “most liked” playlist from Spotify so it makes sense that regardless our overall mean of 75 stays the same).

Thus, it is not only the fact that people differ in their general liking of music, but also that songs differ in their propensity to be liked. To represent this we can add another random intercept representing songs:

\[ y_{ijk} = \beta_0 + u_{0j} + w_{0k} + (\beta_1+u_{1j})\times genre_{i} + \epsilon_{i} \] The new term \(w_{0k}\) is doing just what I described above, and is very similar to \(u_{0j}\) but it represents the term \(w\), a term that tracks the deviation from the overall liking scores for each given song \(_k\).

We can, for instance, assume that the random intercept for songs will be half the size of random intercepts across people, making the assumption that differences between people are often larger than differences between things, in this case songs.

sd_w0 <- sqrt(11.25)/2

W0 <- rnorm(length(unique(data2$song)), 0, sd_w0) # note how we use the number of songs here to specify the size of W0 instead of the participant numberWe can now just add this to the simulation of the DV again and add the random intercept to the lmer syntax.

Note that in the code below, we need a way to index to the right song for each value in W0.

For participants this was easy, as participant numbers are just numerals from 1-J that automatically also represent index values.

For songs however, the syntax data2$song[i] will not return a number, but, for instance rock_1, as this is the song ID.

We could either covert song IDs to numbers as well, or we can use syntax that retrieves the index of, for example rock_1 in the list of unique songs.

This is what the slightly confusing W0[which(unique_songs == data2$song[i])] syntax does.

Note that we create unique_songs outside of the loop, because creating the list of unique songs causes R to do a search across the entire data-set for unique song names, and it suffices to do this once instead of every iteration of the loop.

You will note that the code will run significantly slower if you put it inside of the loop.

unique_songs <- unique(data2$song)

for(i in 1:nrow(data2)){

data2$liking[i] <- rnorm(1,

b0+U01[data2$participant[i], 1] +

W0[which(unique_songs == data2$song[i])] +

(b1+U01[data2$participant[i], 2])*data2$genre_pop[i],

epsilon)

}

lmem5 <- mixed(liking ~ genre + (1 + genre | participant) + (1 | song), data2, method = "S", control = lmerControl(optimizer = "bobyqa"))## Fitting one lmer() model. [DONE]

## Calculating p-values. [DONE]summary(lmem5)## Linear mixed model fit by REML. t-tests use Satterthwaite's method [

## lmerModLmerTest]

## Formula: liking ~ genre + (1 + genre | participant) + (1 | song)

## Data: data

## Control: lmerControl(optimizer = "bobyqa")

##

## REML criterion at convergence: 135951.7

##

## Scaled residuals:

## Min 1Q Median 3Q Max

## -3.9684 -0.6629 0.0116 0.6474 3.4745

##

## Random effects:

## Groups Name Variance Std.Dev. Corr

## participant (Intercept) 12.47 3.531

## genre1 25.39 5.038 -0.13

## song (Intercept) 5.20 2.280

## Residual 45.21 6.724

## Number of obs: 20000, groups: participant, 500; song, 40

##

## Fixed effects:

## Estimate Std. Error df t value Pr(>|t|)

## (Intercept) 75.0514 0.3965 53.5030 189.291 <2e-16 ***

## genre1 0.3312 0.4278 71.8909 0.774 0.441

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Correlation of Fixed Effects:

## (Intr)

## genre1 -0.028We can see that the random intercept SD is slightly higher than we wanted to simulate, but this is not surprising given that there are only 40 different songs that the random intercept SD is simulated over. If we would increase the number of songs to, say 10,000, the value would be much closer to what we expect. However, if we would want to keep the number of participants the same we would also have a data-set of 5,000,000 rows, and it would take ages to estimate, and it is also not a realistic design to have people listen to 10,000 songs each.

Should we add the genre random slope for songs as well? Try to think about this for a second. What would it mean to add the random slope for genre across songs here? It would mean that we would expect a certain song to differ in its propensity to be liked as a pop song compared to being liked as a rock song. Clearly, this does not make sense as a song is either rock or pop but cannot “switch” genre from rock to pop or vice versa. Thus, genre is a within-participant factor here but it is between-songs meaning that we cannot add a random slope here as it does not make sense.

Adding a second-order (nested) random term

It could be possible that the liking that people show for music also depend on what country they live in as music could be more or less important in a culture. To address this, we could add a random term that identifies different countries. For instance, we could assume that our 500 participants in this simulation come from 5 different countries (I am not qualified to make any actual statements about which countries would show which effect and do not want to imply things only for the sake of an example so I will just call the countries Country A - Country E). We can also assume that the countries are of different size so that they will not be equally represented by the 500 people in our sample.

country_list <- sample(c("A", "B", "C", "D", "E"),size = length(unique(data2$participant)), prob = c(0.25, 0.15, 0.05, 0.35, 0.2), replace = T)

table(country_list)## country_list

## A B C D E

## 133 64 20 173 110Using the sample command, we sampled a list that contains 500 letters from A-E representing countries, one for each participant.

The countries relative size is defined by the prob argument (e.g. country A is assumed to have 5 times the population of country C).

We can now add these countries to the data-frame:

for(i in 1:nrow(data2)){

data2$country[i] <- country_list[data2$participant[i]]

}Above, I mentioned that we are working with a nested rather than a crossed effect here. However, for simulation, this does actually not matter much, because, as Robert Long mentions: “Nesting is a property of the data, or rather the experimental design, not the model.” For us, this means that we can add nested random effects just as we can add crossed effects. However, it might make sense (depending on the situation) to make nested effects’ size depend on each other. For instance, we might think of it like this: knowing the country that a person is from already tells us something about their music liking. Having this information does not explain previously unexplained variance, because the variance was already there, but it was attributed to the person not knowing what “caused” it. For instance, we could assume that 10% of the variance that is no attributed to the random intercept of participants can actually be attributed to country.

Thus we could decrease the size of the random terms for participant by 10 percent and move that 10 percent variance to the random term for country.

The country random term CU01 will then look like this:

sd_cu0 <- sd_u0*0.1

sd_cu1 <- sd_u1*0.1

corr_u01 <- -.10

sigma_cu01 <- matrix(c(sd_cu0^2, sd_cu0*sd_cu1*corr_u01, sd_cu0*sd_cu1*corr_u01, sd_cu1^2), ncol = 2)

CU01 <- mvrnorm(length(unique(data2$country)),c(0,0),sigma_cu01)

cor(CU01)## [,1] [,2]

## [1,] 1.000000 -0.560638

## [2,] -0.560638 1.000000and the participant part is cut down by 10% like this:

set.seed(1)

sd_u0 <- sd_u0*0.9

sd_u1 <- sd_u1*0.9

corr_u01 <- -.20

sigma_u01 <- matrix(c(sd_u0^2, sd_u0*sd_u1*corr_u01, sd_u0*sd_u1*corr_u01, sd_u1^2), ncol = 2)

U01 <- mvrnorm(length(unique(data2$participant)),c(0,0),sigma_u01)and we model it like this:

unique_songs <- unique(data2$song)

unique_countries <- unique(data2$country)

for(i in 1:nrow(data2)){

data2$liking[i] <- rnorm(1,

b0+CU01[which(unique_countries == data2$country[i]), 1]

+U01[data2$participant[i], 1] +

W0[which(unique_songs == data2$song[i])] +

(b1+U01[data2$participant[i], 2]+

CU01[which(unique_countries == data2$country[i]),2])

*data2$genre_pop[i],

epsilon)

}

lmem6 <- mixed(liking ~ genre + (1 + genre | country/participant)

+ (1 | song), data2, method = "S", control = lmerControl(optimizer = "bobyqa"))## Fitting one lmer() model.## boundary (singular) fit: see ?isSingular## [DONE]

## Calculating p-values. [DONE]summary(lmem6)## Linear mixed model fit by REML. t-tests use Satterthwaite's method [

## lmerModLmerTest]

## Formula: liking ~ genre + (1 + genre | country/participant) + (1 | song)

## Data: data

## Control: lmerControl(optimizer = "bobyqa")

##

## REML criterion at convergence: 135718.5

##

## Scaled residuals:

## Min 1Q Median 3Q Max

## -3.9376 -0.6575 -0.0110 0.6574 3.6663

##

## Random effects:

## Groups Name Variance Std.Dev. Corr

## participant:country (Intercept) 9.8338 3.1359

## genre1 20.6720 4.5466 -0.16

## song (Intercept) 4.7068 2.1695

## country (Intercept) 0.0422 0.2054

## genre1 0.1831 0.4279 -1.00

## Residual 45.1516 6.7195

## Number of obs: 20000, groups: participant:country, 500; song, 40; country, 5

##

## Fixed effects:

## Estimate Std. Error df t value Pr(>|t|)

## (Intercept) 75.2837 0.3868 40.4651 194.650 <2e-16 ***

## genre1 0.0941 0.4523 20.7557 0.208 0.837

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Correlation of Fixed Effects:

## (Intr)

## genre1 -0.146

## optimizer (bobyqa) convergence code: 0 (OK)

## boundary (singular) fit: see ?isSingularWe can see that the model shows a singularity warning in this case which might be due to the fact that A) the random intercept term for country is very small compared to the other terms and B) the random correlation for the country intercept and slope is estimated based on only 5 pairs of observations (1 for each country), which is obviously very little information.

I wanted to demonstrate how such a nested effect can be added but will now continue without it, as it causes convergence problems here and I want to move on to models with more fixed effects now, and restore the 100% random effect for participants from above.

set.seed(1)

b0 <- 75

b1 <- 0

sd_u0 <- sqrt(11.25)

epsilon <- sqrt(56.25-11.25)

sd_u1 <- 5

corr_u01 <- -.20

sigma_u01 <- matrix(c(sd_u0^2, sd_u0*sd_u1*corr_u01, sd_u0*sd_u1*corr_u01, sd_u1^2), ncol = 2)

U01 <- mvrnorm(length(unique(data2$participant)),c(0,0),sigma_u01)

sd_w0 <- sqrt(11.25)/2

W0 <- rnorm(length(unique(data2$song)), 0, sd_w0) # note how we use the number of songs here to specify the size of W0 instead of the participant numberAdding more fixed effects

Above, we have seen how to simulate random intercepts and slopes. Together with the techniques that we learned in part III of this tutorial, where we already worked with mixed (between x within + covariate) designs, we should be able to build most of the models we work with now. However, we still haven’t done a proper power analysis yet (given that we did not expect a difference between genres above that would also have been pointless anyway), and will therefore add a few other terms to the model on which we will build the power analysis.

Of course, even though in the general population, people like pop and rock equally, there might still be factors that help us to predict a particular person’s liking for music. For instance, people who play an instrument might like music more in general. If we want to add this factor to our model, we will have to grant some of our participants in the ability to play an instrument. Now, the percentage of people playing an instrument is probably lower than 50%. Thus, we should not just make a 50-50 split but come up with some more realistic number. For instance, I would expect that 1 out of 5 (or 20%) of people plays an instrument. However, because it is not a factor that we are manipulating, the number of people playing an instrument in any particular sample will not be 20% but might be more or less close to that number. This is an important point - we could just always grant exactly 20% of our sample, e.g. 20 out of 100 participants, the ability to play an instrument. However, this would not keep in mind that we only expect 20% of the population to play an instrument and in a sample we should expect sampling variance regarding this number. Thus, instead of granting exactly 20% of people this ability, we will sample the probability that someone is playing an instrument with a probability of 20%.

generate_design <- function(n_participants, n_genres, n_songs, prop_instrument = .20){

design_matrix <- expand.grid(participant = 1:n_participants, genre = 1:n_genres, song = 1:n_songs)

design_matrix$genre <- ifelse(design_matrix$genre ==1, "rock", "pop")

design_matrix$song <- paste0(design_matrix$genre, "_", design_matrix$song)

instrument_players <- sample(c("yes", "no"), n_participants, prob = c(prop_instrument, (1-prop_instrument)), replace = T) # sample whether people play instrument

for(i in 1:nrow(design_matrix)){ # grant people the ability to play instrument

design_matrix$instrument[i] <- instrument_players[design_matrix$participant[i]]

}

return(design_matrix)

}

data3 <- generate_design(n_participants = 500, n_genres = 2, n_songs = 20, prop_instrument = .20)

data3$genre_pop <- ifelse(data3$genre == "pop", 1, -1)

data3$instrument_yes <- ifelse(data3$instrument == "yes", 1, -1)

mean(data3$instrument == "yes")## [1] 0.208In this case, 20.6 percent of our 500 participants play an instrument, which is close to 20% as the sample-size is so large.

Now what does this additional effect imply for the random effect structure: Playing an instrument is obviously a between-subject factor, but it is, in fact, a within-song factor, as for each song, there are people who do and do not play an instrument and who are listening to it. Thus, we can estimate a random slope for instrument within songs, representing the idea that between different songs, the difference in liking between people who do and do not play instruments might differ. For example, if a song that has very sophisticated guitar-playing, the difference in liking between instrument-players and non-players might be very heavy, while for other songs that have average amounts of sophistication, the difference in liking might be relatively small. Thus, we will keep what we did above when adding a crossed random intercept, but now simulate it with a variance-covariance matrix just as we did for the random effects for participant above.

We also need to specify how big we expect that instrument effect to be. As a sum-to-zero coded effect, we could set it to 2.5 so that playing an instrument increases the average liking of music by 5 points compared to non-players.

set.seed(1)

b0 <- 75

b1 <- 0

b2 <- 2.5 # the instrument effect

sd_u0 <- sqrt(11.25)

epsilon <- sqrt(56.25-11.25)

sd_u1 <- 5

corr_u01 <- -.20

sigma_u01 <- matrix(c(sd_u0^2, sd_u0*sd_u1*corr_u01, sd_u0*sd_u1*corr_u01, sd_u1^2), ncol = 2)

U01 <- mvrnorm(length(unique(data2$participant)),c(0,0),sigma_u01)

sd_w0 <- sqrt(11.25)/2

sd_w1 <- sd_w0/4

corr_w01 <- .10

sigma_w01 <- matrix(c(sd_w0^2, sd_w0*sd_w1*corr_w01, sd_u0*sd_w1*corr_u01, sd_w1^2), ncol = 2)

W01 <- mvrnorm(length(unique(data2$song)),c(0,0),sigma_w01)As you can see, we now have a random-effects variance-covariance matrix for the song effects as well. I set the correlation to be very small in this case, but for no particular reason (I think it does not matter much). Moreover, the random slope is 0.25x the size of the random intercept SD, as I do not expect the aforementioned instrument effect to be very big.

The only thing left is to simulate the DV and estimate the model:

unique_songs <- unique(data3$song)

for(i in 1:nrow(data3)){

data3$liking[i] <- rnorm(1,

b0+ # fixed intercept (average liking)

U01[data3$participant[i], 1] + # random intercept for participants

W01[which(unique_songs == data3$song[i]), 1] + # random intercept term for song

(b1+ # fixed effect of genre (which is 0)

U01[data3$participant[i], 2]) # random slope for genre across participants

*data3$genre_pop[i] # for each row whether its pop or rock

+(b2+ # fixed effect of instrument

W01[which(unique_songs == data3$song[i]), 2])

*data3$instrument_yes[i]# random slope for instrument across songs

, epsilon) # residual SD

}

data3$genre <- factor(data3$genre)

data3$instrument <- factor(data3$instrument, levels = c("yes", "no")) # explicitly set yes to be 1 and no to be 0

lmem7 <- mixed(liking ~ genre + instrument + (1 + genre | participant) + (1 + instrument | song), data3, method = "S", control = lmerControl(optimizer = "bobyqa"))## Fitting one lmer() model. [DONE]

## Calculating p-values. [DONE]summary(lmem7)## Linear mixed model fit by REML. t-tests use Satterthwaite's method [

## lmerModLmerTest]

## Formula: liking ~ genre + instrument + (1 + genre | participant) + (1 +

## instrument | song)

## Data: data

## Control: lmerControl(optimizer = "bobyqa")

##

## REML criterion at convergence: 135963.6

##

## Scaled residuals:

## Min 1Q Median 3Q Max

## -3.9185 -0.6525 -0.0122 0.6531 3.5999

##

## Random effects:

## Groups Name Variance Std.Dev. Corr

## participant (Intercept) 12.6255 3.5532

## genre1 25.5115 5.0509 -0.16

## song (Intercept) 2.6381 1.6242

## instrument1 0.1525 0.3905 0.00

## Residual 45.2161 6.7243

## Number of obs: 20000, groups: participant, 500; song, 40

##

## Fixed effects:

## Estimate Std. Error df t value Pr(>|t|)

## (Intercept) 75.28328 0.32770 88.67272 229.735 <2e-16 ***

## genre1 -0.04608 0.34708 109.64759 -0.133 0.895

## instrument1 2.50729 0.21125 416.01733 11.869 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Correlation of Fixed Effects:

## (Intr) genre1

## genre1 -0.053

## instrument1 0.345 -0.003As we can see, everything simulated as we wanted and would expect - very nice!

Finally! Mixed Effects power!

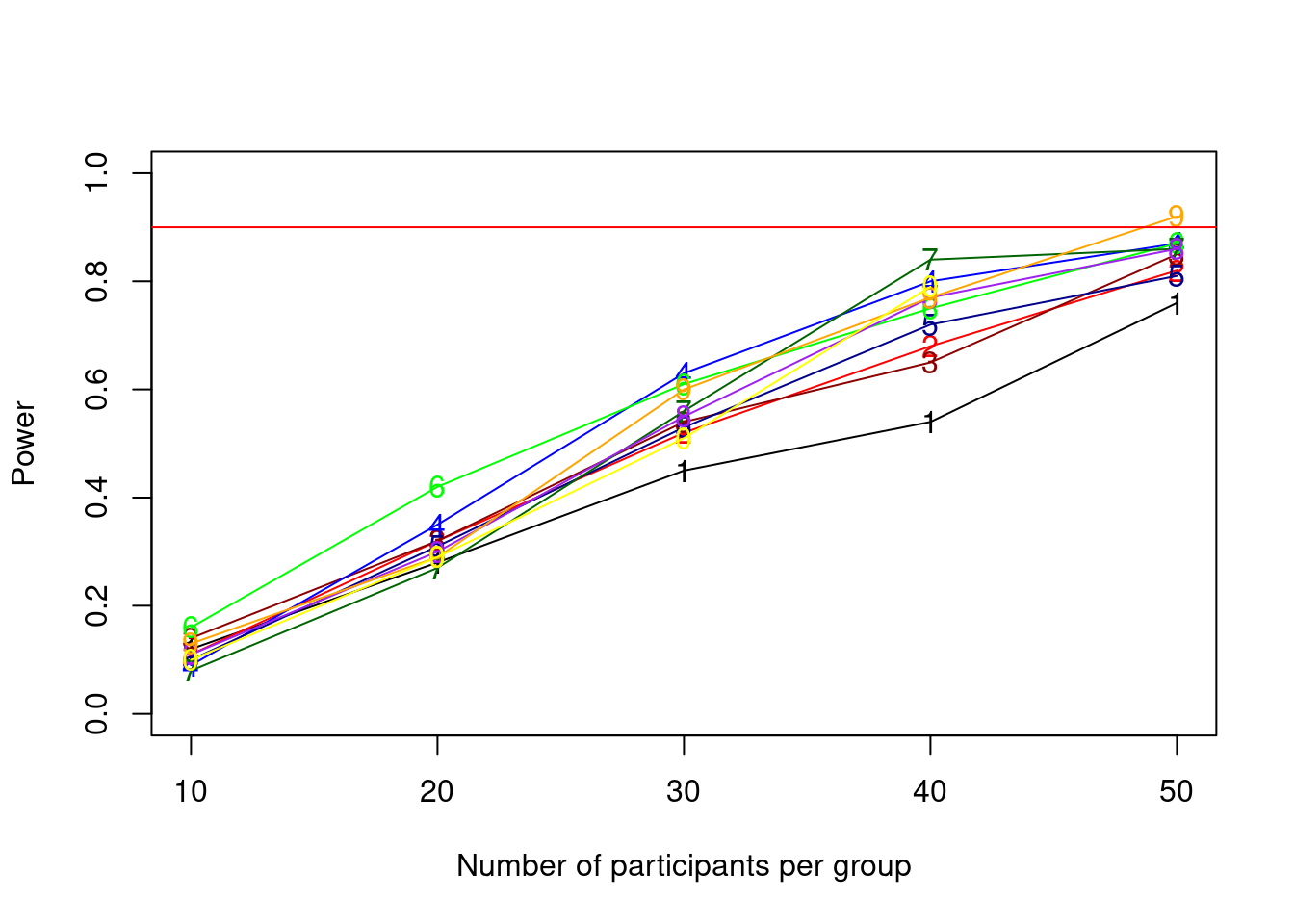

Let us wrap this tutorial up. It is time to put this into a loop now and do a proper power analysis. With our design above, it is possible to increase the sample size in 2 ways:

- We can increase the number of participants

- We can increase the number of songs per participant

Normally, what increases power faster is to increase the number of participants, as there is often more variance between participants than between items such as songs. However, this depends on the specific research question and assumptions, so it remains a domain-knowledge question, that you as an expert in your field need to consider. It is also a question of resources: If a trial is relatively short, adding more trials might not hurt you much in terms of time and budget, but if a trial takes long or costly, it might be wise to not add unnecessary trials.

Regardless, we can of course run a simulation where we increase both, which is what we do in the following. I assume here that the maximum number of songs that we can have people listen to is 100, as we are working with a top100 list and there are just not more songs in there. Moreover, we will cap the number of participants at 500.

First, we define a matrix pp_song_mat that uses expand.grid to create the possible combinations of participant-number and song-number.

It is important here that song-number is the first argument, because we want to increase that first before we increase the number of participants, as we will use the row-number of this matrix to get the respective song and participant number in the power-analysis loop.

To make this a little bit clearer lets first see what we need before the loop, which are all the things that are fixed across simulations:

pp_song_mat <- expand.grid(song = seq(10, 100, by = 10), participant = seq(10, 500, by = 10)) # matrix containing all the different combinations of song number and participants

pp_song_mat <- data.frame(rbind(c(-999,-999), pp_song_mat)) # extra row for first iteration

pp_song_mat$power <- rep(-999)

pp_song_mat$p_singular <- rep(-999)

pp_song_mat$p_nonconv <- rep(-999)

pp_song_mat$skips_at_n <- rep(-999)

pp_song_mat$control_row <- rep(-999)

n_sims <- 100 # we want 100 simulations here which will already be 50,000 models to fit if power does not reach .90 before that as we have 500 combinations of songs-number and participant number

p_vals <- c()

# power_at_n <- c(0) # this vector will contain the power for each sample-size (it needs the initial 0 for the while-loop to work)

n <- 1

power_crit <- .90

alpha <- .005

b0 <- 75

b1 <- 0

b2 <- 2.5

sd_u0 <- sqrt(11.25)

epsilon <- sqrt(56.25-11.25)

sd_u1 <- 5

corr_u01 <- -.20

sigma_u01 <- matrix(c(sd_u0^2, sd_u0*sd_u1*corr_u01, sd_u0*sd_u1*corr_u01, sd_u1^2), ncol = 2)

sd_w0 <- sqrt(11.25)/2

sd_w1 <- sd_w0/4

corr_w01 <- .10

sigma_w01 <- matrix(c(sd_w0^2, sd_w0*sd_w1*corr_w01, sd_u0*sd_w1*corr_u01, sd_w1^2), ncol = 2)

# some additional stuff we need

is_singular <- c() # this will be used to check how many models are singular fits

is_nonconv <- c() # this will be used to check how many models did not convergeWhat we define here is what we always defined in the previous power analyses (critical power, alpha, loop iterators etc.) and the exact same model parameters that we defined above.